Grafilab Whitepaper

- Getting Started

- Introduction

- Market Overview

- Challenges

- Goal

- What Are We Building?

- Grafi Cloud

- Grafi Co-Builder

- Grafi AI App Store

- Grafi AI Data Layer

- Ecosystem (Flywheel)

- Token Info

- Roadmap

Getting Started

Grafilab is building a decentralized AI ecosystem that empowers ordinary people to participate in AI development, deployment, and commercialization. By offering tools like the CeDePIN Cloud, the Co-Builder platform, and the App Store, all secured through our AI Data Layer, Grafilab enables users and developers to access, build, and monetize AI without deep technical expertise.

Introduction

Grafilab is a revolutionary AI ecosystem that empowers anyone, from ordinary users to developers, to participate in and benefit from AI innovation. Through our CeDePIN Cloud, Co-Builder, and AI App Store platforms, users can easily deploy, train, and monetize AI apps and agents without deep technical expertise. At the heart of Grafilab is our AI Data Layer, which secures data integrity, ownership, and transparency, creating a trusted environment for AI development and commercialization. Grafilab is breaking down the barriers to AI accessibility and driving the evolution toward AGI.

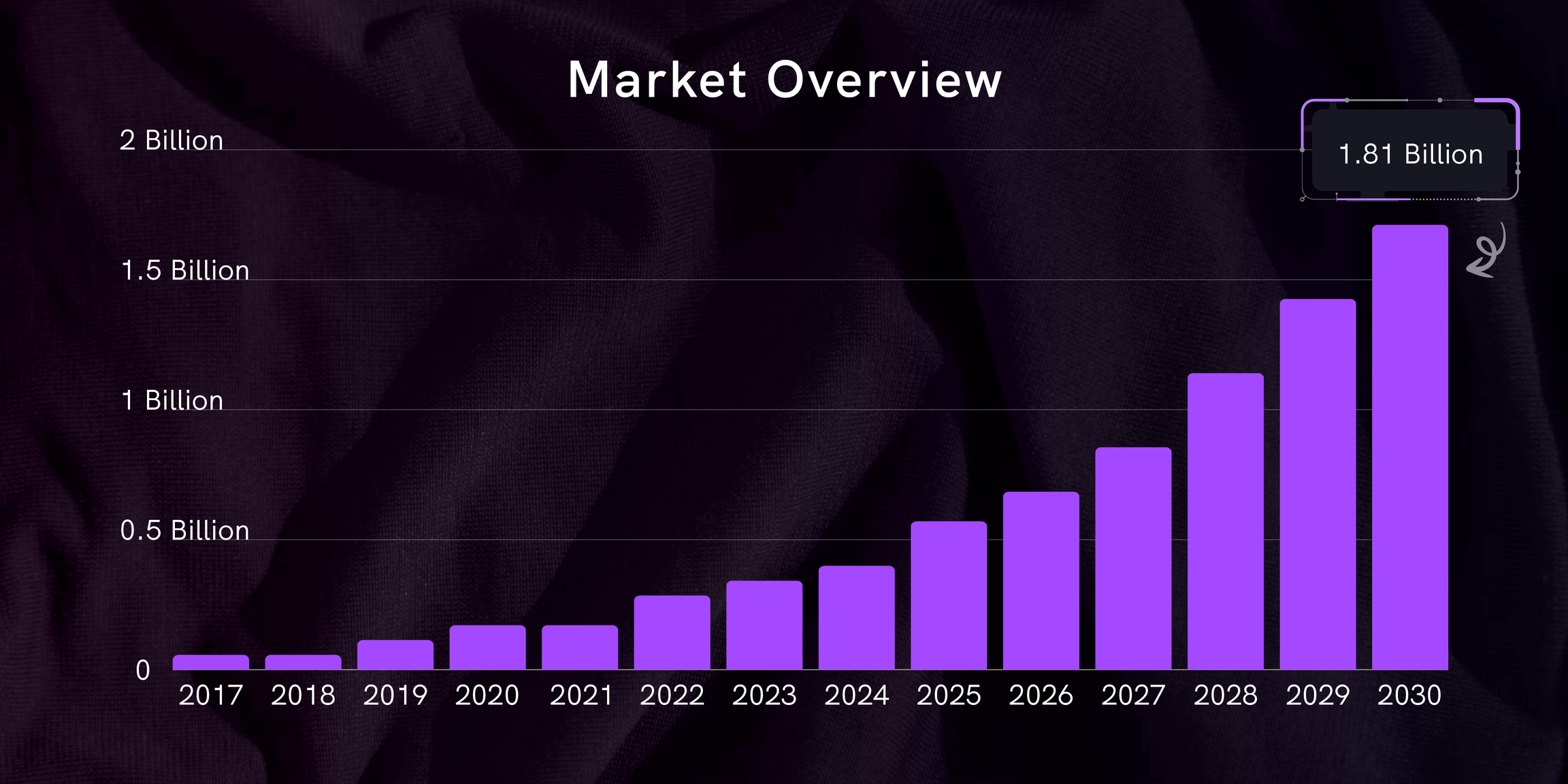

Market Overview

The global artificial intelligence market generated revenue of USD 196.63 billion in 2023 and is expected to reach USD 1.81 trillion by 2030, growing at a compound annual growth rate (CAGR) of 37.3% from 2024 to 2030. Key drivers of this growth include rapid advances in machine learning and deep learning, and the adoption of AI-powered tools across diverse industries such as healthcare, logistics, finance, and entertainment.
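The two growth figures are consistent with each other. A quick arithmetic check, assuming seven annual compounding steps from the 2023 base to 2030 (figures in USD billions):

```python
# Sanity check of the market projection quoted above (figures in USD billions).
BASE_2023 = 196.63
TARGET_2030 = 1810.0
YEARS = 2030 - 2023  # seven compounding periods


def project(base: float, rate: float, years: int) -> float:
    """Compound `base` at `rate` per year for `years` years."""
    return base * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


print(f"Projected 2030 revenue: {project(BASE_2023, 0.373, YEARS):,.1f} B")
print(f"Implied CAGR: {implied_cagr(BASE_2023, TARGET_2030, YEARS):.1%}")
```

Compounding at 37.3% lands at roughly USD 1.81 trillion, and solving for the rate that links the two endpoints gives about 37.3%.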

One of the most transformative trends in AI today is the rise of AI agents. These autonomous systems, capable of executing tasks and making decisions without constant human oversight, are being integrated into customer support, personal assistants, and enterprise-level automation. Companies are leveraging AI agents to enhance efficiency, reduce costs, and provide hyper-personalized user experiences. This trend highlights the need for scalable computing resources and advanced model training platforms.

Simultaneously, the evolution of generative AI, exemplified by OpenAI’s ChatGPT and similar models, has revolutionized content creation, marketing, and customer engagement. The integration of AI agents with generative AI capabilities further amplifies their utility, enabling creative problem-solving and dynamic interactions. These advancements underline the critical importance of accessible infrastructure and real-time data systems.

Looking toward the future, Artificial General Intelligence (AGI) remains a transformative milestone, with some projections placing early breakthroughs around 2030. AGI development is expected to drive unprecedented demand for computational power, secure data layers, and robust collaboration tools, creating a significant opportunity for innovative AI ecosystems.

Challenges

As the global AI industry is projected to grow to $1.81 trillion by 2030, the path to achieving Artificial General Intelligence (AGI) faces significant challenges:

AI Commercialization: AI models need an App Store to reach users and generate revenue. Grafi AI App Store provides a seamless AI-as-a-Service (AIaaS) platform for developers to monetize their work.

Barriers for Developers: Small teams and startups face high costs and limited access to tools. Our AI Co-Builder lets anyone build, deploy, and scale AI models using fractional GPUs.

Insufficient Compute Power: The existing infrastructure can’t keep up with the demand for AI. Our CeDePIN Cloud solves this by offering scalable, cost-effective compute resources.

Data Integrity, Ownership & Security: Data ownership and model integrity are critical. Our AI Data Layer ensures tamper-proof, verifiable records of AI development on-chain, protecting data rights and usage.

Goal

To drive the evolution of AGI

Our goal is to make AI development, deployment, and monetization accessible to everyone—regardless of technical expertise. By providing secure data environments through our AI Data Layer, and easy-to-use platforms like the AI App Store, Co-Builder, and CeDePIN Cloud, we empower users and developers to participate in and benefit from the AI revolution.

What Are We Building?

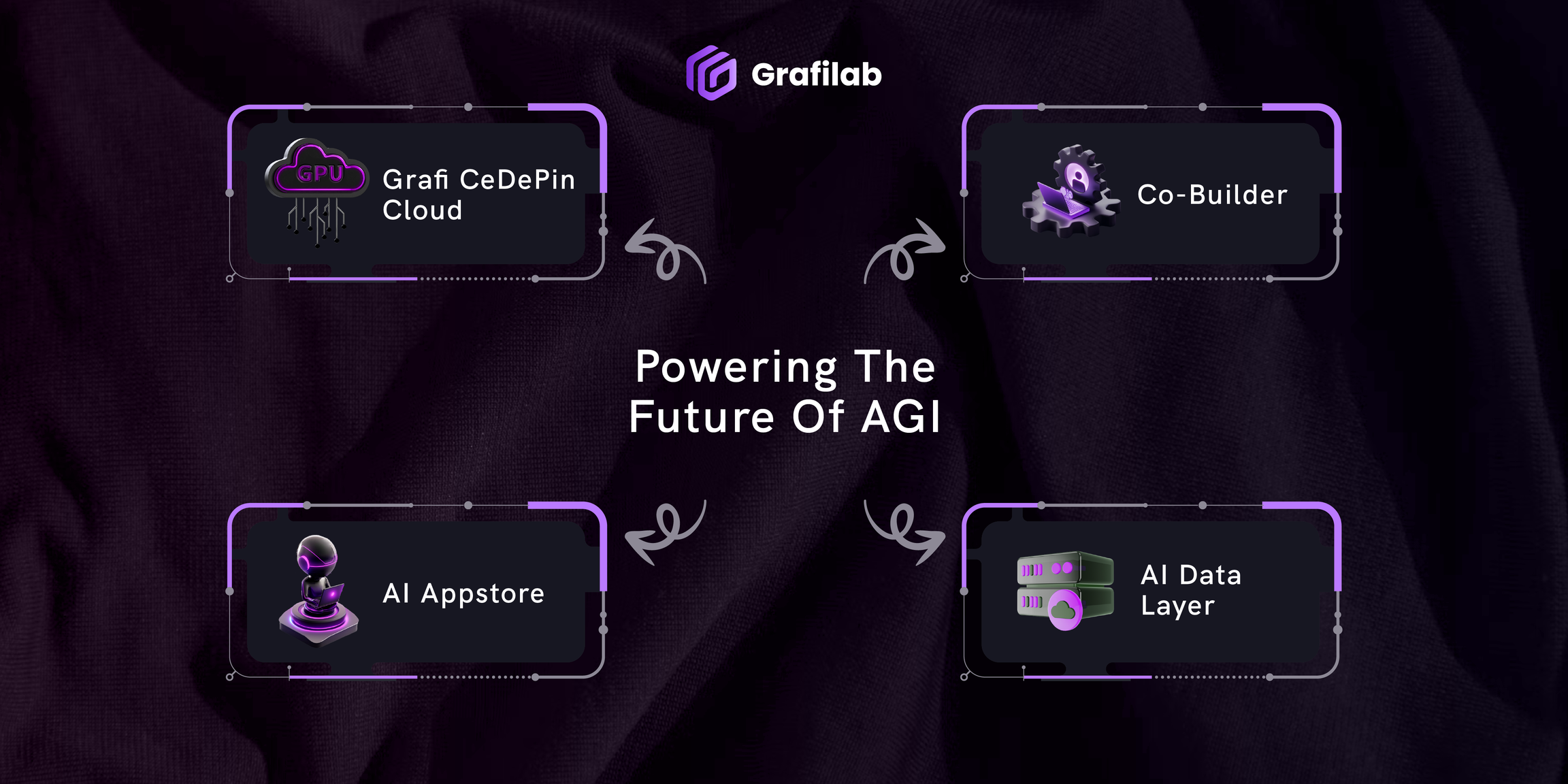

Grafilab is uniquely positioned to address the growing demands of the AI market through its comprehensive suite of decentralized AI products:

- Grafi Cloud: By integrating centralized and decentralized GPU resources, Grafi Cloud ensures cost-effective and scalable computational power to support compute-intensive tasks, from AI training to gaming.

- Grafi Co-Builder: The Co-Builder platform fosters collaboration among developers, offering essential resources for training and deploying AI apps and agents and for co-creating projects. This promotes innovation and accelerates the development cycle.

- AI App Store: Grafilab’s AI App Store empowers developers by providing a seamless platform to deploy and monetize AI applications & agents. Users benefit from an extensive catalog of AI tools tailored to diverse industry needs.

- AI Data Layer: The Grafi AI Data Layer collects data from sources like CeDePIN Cloud, the Inference API, and AI apps/agents within the Grafi ecosystem. It validates, labels, and organizes data into swarms, ensuring high-quality inputs for model fine-tuning. This refined data enhances Grafilab's customized Mixture-of-Experts (MoE) LLM and smaller LLMs, catering to diverse AI needs efficiently.

Grafi Cloud

Get Started

Overview

Grafi Cloud is Grafilab's innovative platform that bridges centralized (CePIN) and decentralized (DePIN) GPU resources, enabling efficient, scalable, and secure solutions for AI, machine learning, and computational workloads.

Core Features

Supply GPU with Grafi Agent

- For Contributors: Share idle GPU with the Grafi Cloud network and earn rewards.

Key Features:

- Cross-Platform Support: Available for both Windows and Ubuntu operating systems, ensuring compatibility with most GPU setups.

- Automated Resource Management: Grafi Agent handles GPU resource allocation, task scheduling, and data exchange without requiring manual intervention.

- Secure Integration: Uses Web3 authentication and smart contracts to ensure secure GPU contribution and revenue sharing.

- Earnings and Incentives: Contributors receive revenue based on GPU performance, uptime, and availability, with an additional $GRAFI token incentive for DePIN contributors.

Rent GPU Power with Rent & Run

- For Renters: Access a wide array of GPU resources tailored for tasks like AI model training, gaming, rendering, and data analytics.

Key Features:

- Flexible GPU Options: Choose from centralized GPUs in data centers (CePIN) or decentralized GPUs contributed by individuals (DePIN).

- Pre-Configured Environments: Renters can select from Docker templates preloaded with popular AI frameworks like TensorFlow, PyTorch, or OpenCV for faster setup.

- Custom Configurations: Users can upload and run custom Docker images for specialized workflows.

- Real-Time Monitoring: Track GPU performance, task completion status, and resource utilization through a user-friendly dashboard.

- Transparent Billing: Costs are calculated based on GPU usage time, ensuring clear and predictable pricing.

How It Works

For Contributors (Grafi Agent)

- Download and Install: Install Grafi Agent on your GPU-enabled device via the Grafi Cloud Console.

- Connect to the Network: Authenticate your device using Web3 credentials and connect it to the Grafilab ecosystem.

- Start Earning: Once connected, your GPU is listed for rent. Earnings are calculated based on usage, uptime, and performance metrics.

- Monitor and Optimize: Use the Grafi Cloud Console to track your GPU’s performance, rental history, and earnings in real-time.

For Renters

- Explore Resources: Browse available GPUs categorized by performance, cost, and location.

- Select GPU: Choose from CePIN or DePIN options based on your task requirements.

- Deploy Tasks: Use pre-configured Docker templates or custom configurations to set up your computational tasks.

- Monitor Progress: Track task performance and GPU metrics through the dashboard.

- Complete and Pay: Payments are processed securely using credit cards or supported cryptocurrencies, with contributors receiving their share directly.
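The Explore Resources and Select GPU steps amount to filtering listings by the renter's constraints. A minimal sketch, assuming a hypothetical `GpuListing` record; the field names and sample prices are illustrative only, not Grafi Cloud's actual listing schema or pricing:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuListing:
    """Hypothetical shape of one GPU listing; the real console fields are not documented."""
    node_id: str
    model: str
    tier: str              # "CePIN" (data center) or "DePIN" (individual contributor)
    vram_gb: int
    price_per_hour: float  # assumed to be quoted in USD
    region: str


def select_gpus(listings, *, min_vram_gb, max_price, tier=None, region=None):
    """Filter listings by the renter's requirements and sort cheapest first."""
    matches = [
        g for g in listings
        if g.vram_gb >= min_vram_gb
        and g.price_per_hour <= max_price
        and (tier is None or g.tier == tier)
        and (region is None or g.region == region)
    ]
    # Cheapest first; among equal prices, prefer more GPU memory.
    return sorted(matches, key=lambda g: (g.price_per_hour, -g.vram_gb))


catalog = [  # illustrative sample data
    GpuListing("dc-01", "A100", "CePIN", 80, 1.90, "us-east"),
    GpuListing("home-17", "RTX 4090", "DePIN", 24, 0.45, "eu-west"),
    GpuListing("home-22", "RTX 3090", "DePIN", 24, 0.30, "ap-southeast"),
]

for gpu in select_gpus(catalog, min_vram_gb=24, max_price=1.00, tier="DePIN"):
    print(gpu.node_id, gpu.model, gpu.price_per_hour)
```

Sorting by price and then by memory is one reasonable default; a real console would likely also weigh the uptime and node metrics shown on the Network Map.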

Payment and Incentives

For Renters

- Flexible Payment Options: Pay with credit cards, stablecoins, or $GRAFI tokens.

- No Hidden Fees: Transparent pricing ensures renters know exactly what they’re paying for.

For Contributors

- Revenue Sharing: Contributors receive 90% of rental revenue, with the remaining 10% allocated to Grafilab as a platform fee.

- Incentives for DePIN GPU Contributors: DePIN GPU contributors are rewarded with $GRAFI tokens for GPU uptime.
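Renter billing is based on GPU usage time, and rental revenue is split 90/10 between contributor and platform. The sketch below works through that arithmetic; the hourly rate, per-hour granularity, and rounding to cents are assumptions, and the separate $GRAFI uptime incentive for DePIN contributors is not modeled:

```python
from decimal import Decimal, ROUND_HALF_UP

PLATFORM_SHARE = Decimal("0.10")  # 10% platform fee; the contributor keeps the other 90%
CENT = Decimal("0.01")


def bill_rental(hours: Decimal, rate_per_hour: Decimal) -> dict:
    """Charge for GPU usage time and split it between contributor and platform."""
    total = (hours * rate_per_hour).quantize(CENT, rounding=ROUND_HALF_UP)
    platform_fee = (total * PLATFORM_SHARE).quantize(CENT, rounding=ROUND_HALF_UP)
    contributor_payout = total - platform_fee  # remainder, so the parts always sum to the total
    return {"total": total, "contributor": contributor_payout, "platform": platform_fee}


# Hypothetical job: 3.5 hours at 0.80 per hour.
print(bill_rental(Decimal("3.5"), Decimal("0.80")))
```

`Decimal` is used instead of `float` so that currency amounts round predictably to the cent.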

Advanced Features

1. Network Map

- Global GPU Distribution: View active GPUs across the Grafilab ecosystem, contributed by centralized data centers and decentralized users.

- Node Metrics: Click on individual nodes to view performance data, including uptime, utilization rates, and rental activity.

- Real-Time Updates: The map displays live data, allowing renters to make informed decisions when selecting GPUs.

2. On-Chain Transparency

- Indexed Data: All transactions, payments, and resource usage are logged and published on-chain for auditability.

- Secure Interactions: Smart contracts ensure all processes—from GPU rental to contributor payments—are executed securely and transparently.
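On-chain logging makes records tamper-evident because each entry commits to the one before it. The sketch below illustrates that property with a plain SHA-256 hash chain held in memory; it is a conceptual stand-in, not Grafilab's smart contracts or Solana's actual ledger format, and the record fields are hypothetical:

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with a canonical JSON encoding of the record."""
    payload = prev_hash + json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, record: dict) -> None:
    """Add a record whose hash links it to the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "hash": entry_hash(prev, record)})


def verify(log: list) -> bool:
    """Recompute every link; any edited record breaks the chain from that point on."""
    prev = GENESIS
    for entry in log:
        if entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


ledger = []
append(ledger, {"type": "rental", "node": "home-17", "hours": 2})
append(ledger, {"type": "payout", "node": "home-17", "amount": "0.81"})
print(verify(ledger))  # True
ledger[0]["record"]["hours"] = 20  # tamper with a past record
print(verify(ledger))  # False
```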

Grafi Co-Builder

Get Started

The Grafi Co-Builder is a cutting-edge development platform designed to empower AI researchers, startups, and developers. Leveraging Grafilab’s CeDePIN GPU infrastructure, Co-Builder enables seamless deployment, scaling, and monetization of AI apps and agents. Whether building AI applications or agents, Co-Builder provides the tools and resources to bring your vision to life.

Main Services of Co-Builder

1. Inference API

A powerful service enabling developers to integrate their AI applications or agents with fine-tuned Large Language Models (LLMs) powered by Grafi CeDePIN Cloud.

Key Features:

- Plug-and-Play Integration: Easily connect applications to fine-tuned LLMs and GPUs without backend complexity.

- Real-Time Inference: Provides fast, efficient responses for applications that require real-time outputs.

- Scalability: Handles multiple requests and scales as demand grows, ensuring uninterrupted performance.

- Cost-Effective: Pay only for the resources consumed during inference, making it suitable for projects of any size.

- Worry-free GPU Access: Eliminates the need to select specific GPU specifications, offering developers flexibility and scalability for growth.

2. Deploy Your Own Apps/Agents

A comprehensive solution for developers to upload, train, deploy, and showcase their AI apps/agents with minimal effort.

Key Features:

- Flexible Deployment: Build new apps/agents in a no-code environment, or start from a showcase reference and modify it.

- Multi-Plugin Support: Compatible with various plugins and tools to accommodate diverse development needs.

- GPU Resource Selection: Choose from centralized CePIN GPUs or decentralized DePIN GPUs for cost and performance optimization.

- Monetization Opportunities: List deployed apps/agents on the AI App Store for rent or sale, earning $GRAFI tokens based on usage.

- Interactive Demo Spaces: Create and share live demonstrations of your models with users and stakeholders.

How It Works

Inference API

- Connect Your Application: Use the Co-Builder interface to link your application or agent with the Inference API.

- LLM Selection: Choose the LLM that best suits your app or agent.

- Real-Time Processing: The API processes data and delivers results with optimized speed and accuracy.

- Monitor Usage: Track performance metrics and adjust settings to optimize for cost and efficiency.
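The Inference API's request format is not specified in this document. Purely as an illustration, the sketch below assumes an OpenAI-style chat-completions JSON body sent with a bearer token; the URL, key, and model identifier `llama-3.3` are placeholders, not documented Grafi values. It builds the request with Python's standard `urllib.request` without sending it:

```python
import json
import urllib.request

# Hypothetical values: the real base URL, auth scheme, and model identifiers
# of the Grafi Inference API are not documented here.
API_URL = "https://inference.example.com/v1/chat/completions"
API_KEY = "YOUR_API_KEY"


def build_chat_request(model: str, user_message: str, temperature: float = 0.7) -> urllib.request.Request:
    """Build (but do not send) a POST request in the chat-completions JSON shape."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )


req = build_chat_request("llama-3.3", "Summarize today's GPU utilization report.")
print(req.get_method(), req.full_url)
# Sending it would look like: with urllib.request.urlopen(req) as resp: print(json.load(resp))
```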

Deploy Your Own Apps/Agents

- Upload Your Dataset: Developers upload a prepared dataset or configure a new one within the Co-Builder platform.

- Select Resources: Choose from centralized or decentralized GPUs for deployment, ensuring scalability and cost efficiency.

- Train and Scale: Co-Builder handles training and scaling based on performance requirements.

- Monitor Performance: Use real-time dashboards to oversee training speed, utilization, and outcomes.

- Monetize: List your app or agent on the AI App Store to earn subscription rewards based on usage.

Supported Large Language Models

Grafi Co-Builder comes equipped with several widely used LLMs, such as:

- Llama 3.2

- Llama 3.3

- Qwen 2.5

- DeepSeek V3 (coming soon)

Supported Frameworks

Grafi Co-Builder supports various frameworks to help developers seamlessly deploy and interact with their AI apps and AI agents. The two most popular templates integrated into the platform are Gradio (for AI apps) and ElizaOS (for AI agents), with more available; both are designed to simplify creating a powerful UX for AI apps, agents, and machine learning applications. These frameworks let developers create apps and agents in a flexible no-code environment, providing an intuitive way to showcase and interact with their AI projects.

Gradio Templates

1. What is Gradio?

- Gradio is a Python-based framework that allows developers to quickly build UIs for their machine learning models. It makes it easy for users to interact with AI apps through web-based interfaces, which can be embedded or shared with others.

2. Key Features

- Drag-and-Drop Interfaces: Gradio simplifies the process of creating interactive elements, such as image uploads, audio files, and text inputs.

- Real-Time Interaction: Developers can use Gradio to deploy AI apps that allow real-time interaction with users, enabling them to test or explore different model functionalities.

- Seamless Integration: Gradio templates integrate smoothly with Co-Builder, allowing developers to deploy AI apps directly from the Grafi Cloud Console.

3. How Gradio Templates are Used in Co-Builder

- Pre-Built UIs: Developers can use pre-built Gradio templates in Co-Builder to quickly deploy a front-end interface for their AI apps.

- Customization Options: Co-Builder supports customization of Gradio templates, enabling developers to adjust the layout, inputs, and outputs to fit the unique needs of their AI apps.

- Deploy and Share: After deployment, users can share their Gradio app interfaces with others via web links or list them in the AI App Store for others to use.
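For orientation, this is roughly what a Gradio app looks like before it is wrapped in a template. It uses Gradio's standard `gr.Interface` API; the `describe_text` function is a hypothetical stand-in for a real model, and how Co-Builder packages and deploys the result is not covered here:

```python
def describe_text(text: str) -> str:
    """Placeholder 'model': swap in a call to your own model or an inference endpoint."""
    words = text.split()
    return f"{len(words)} words, {len(text)} characters"


def build_demo():
    """Wrap the model function in a Gradio UI (requires `pip install gradio`)."""
    import gradio as gr  # imported here so the model function works without Gradio installed

    return gr.Interface(
        fn=describe_text,
        inputs=gr.Textbox(label="Input text"),
        outputs=gr.Textbox(label="Result"),
        title="Text stats demo",
    )


# build_demo().launch()  # serves the UI locally in a browser
```

Keeping the model logic in a plain function separates it from the UI layer, so the same function could later back a different front end.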

ElizaOS

1. What is ElizaOS?

- Eliza is a powerful multi-agent simulation framework designed to create, deploy, and manage autonomous AI agents. Built with TypeScript, it provides a flexible and extensible platform for developing intelligent agents that can interact across multiple platforms while maintaining consistent personalities and knowledge.

2. Key Features:

- Multi-Agent Architecture: Deploy and manage multiple unique AI personalities simultaneously

- Character System: Create diverse agents using the character file framework

- Memory Management: Advanced RAG (Retrieval Augmented Generation) system for long-term memory and context awareness

- Platform Integration: Seamless connectivity with Discord, Twitter, and other platforms

3. How ElizaOS Templates are Used in Co-Builder

- Grafi Inference API Compatible: The Grafi Inference API works seamlessly with ElizaOS.

- Flexible Customization: The Inference API gives developers the flexibility to customize and fine-tune their agents.

Grafi AI App Store

Get Started

The Grafi AI App Store is a marketplace where AI developers can list, distribute, and monetize their AI applications. It provides an ecosystem for AI startups, researchers, and developers to showcase their innovations, while offering users a simple way to browse, rent, or purchase these applications. This platform helps democratize access to AI technology, offering tools, models, and services that can be integrated into various business processes or research projects.

Key Features of the Grafi AI App Store

1. Listing and Distribution

- Developers can submit their AI agents, applications and models for review and once approved, list them on the AI App Store. The platform provides visibility to both developers and users, allowing contributors to reach a wider audience.

2. AI App Categories:

- The AI App Store offers a range of categories for users to explore, such as:

a. AI Agents: Intelligent, customizable AI agents that provide autonomous functionality, such as task automation, personalized user assistance or data analytics.

b. Machine Learning Models: Pre-trained models ready to be integrated into AI projects.

c. AI Image: Applications for generative imagery.

d. AI Chat: Applications that offer services like AI-based chatbots, natural language processing (NLP), and computer vision.

e. AI Video: Applications for generative video.

f. AI Writing: Applications that offer services like professional writing or thesis writing.

g. AI Voice: Applications that learn from voice samples and mimic them.

h. AI Translate: Applications for real-time AI language translation.

3. Monetization for Developers:

- Developers have options to monetize their AI applications through subscriptions, one-time payments, or pay-per-use pricing models. Additionally, developers earn $GRAFI tokens based on app usage and engagement.

4. Payments:

- Payments in the Grafi AI App Store are handled through Telegram Wallet (in the Telegram AI Store), $GRAFI tokens (in the web application), or other supported cryptocurrencies.

Grafi AI Data Layer

Get Started

The Data Layer is one of the core components of the Grafilab ecosystem, designed to aggregate, validate, and label data. The AI Data Layer is crucial for ensuring privacy, security, and accessibility in the management of computational tasks and AI statistics within the Grafi ecosystem.

Key Components of the Data Layer

1. Grafi AI Node:

Coming Soon

2. Data Aggregation:

- Data Aggregation processes the raw data received from contributors. It organizes and labels relevant data into data swarms, allowing for secure and immutable data records.

- Our data privacy layer encrypts all data flowing into the Data Layer.

How the Data Layer Works

- Data Collection:

- Data is collected from various sources, including CeDePIN Cloud, Inference API, AI Apps/Agents that joined the Grafi ecosystem. This raw data could represent subscription execution details, performance metrics, and user usage statistics.

- Aggregation and Validation:

- The data passes through the Grafi AI Data Layer, which acts as a data aggregator. The layer validates the accuracy and completeness of the data before passing it on for further processing. Validators ensure data quality and integrity, minimize errors, analyze trends, and track user behavior.

- Data Labeling:

- Once validated, the data is labeled and assigned to a data swarm for model fine-tuning.

- Model Fine Tuning:

- High-quality data in the data swarms is used to fine-tune Grafilab's customized MoE LLM and other smaller LLMs to serve different needs.
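The collection, validation, and labeling steps above form a simple pipeline. A minimal sketch, assuming a hypothetical flat record schema (`source`, `kind`, `value`) and a labeling rule chosen only for illustration; the real validators, labels, and swarm criteria are not described here, and encryption and fine-tuning are omitted:

```python
from collections import defaultdict

# Hypothetical record schema: the Data Layer's actual fields are not documented.
REQUIRED_FIELDS = {"source", "kind", "value"}
KNOWN_SOURCES = {"cedepin_cloud", "inference_api", "ai_app"}


def validate(record: dict) -> bool:
    """Keep only complete records from a recognized source with a non-negative numeric value."""
    return (
        REQUIRED_FIELDS.issubset(record)
        and record["source"] in KNOWN_SOURCES
        and isinstance(record["value"], (int, float))
        and record["value"] >= 0
    )


def label(record: dict) -> str:
    """Derive a label from where the record came from and what it measures."""
    return f"{record['source']}:{record['kind']}"


def build_swarms(records: list) -> dict:
    """Validate, label, and group records into swarms keyed by label."""
    swarms = defaultdict(list)
    for record in records:
        if validate(record):
            swarms[label(record)].append(record)
    return dict(swarms)


raw = [
    {"source": "cedepin_cloud", "kind": "gpu_utilization", "value": 0.82},
    {"source": "inference_api", "kind": "latency_ms", "value": 140},
    {"source": "inference_api", "kind": "latency_ms"},         # incomplete: dropped
    {"source": "unknown", "kind": "latency_ms", "value": 90},  # unknown source: dropped
]
for name, items in build_swarms(raw).items():
    print(name, len(items))
```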

Ecosystem (Flywheel)

Revenue Streams of Grafilab

GPU Rental (Grafi Cloud)

- Rental Jobs: Renters can choose from centralized (CePIN) or decentralized (DePIN) GPU resources to meet their specific requirements.

- Contributor Job Earnings: Between 50% and 70% of job earnings are distributed to GPU contributors, depending on whether the resources are centralized or decentralized. Earnings are calculated based on factors such as service duration, GPU performance, and package type.

- Use Cases: This service addresses diverse industry demands, including AI model training, gaming, rendering, and data analytics.

Inference API Subscriptions

- Subscription Plans: Users subscribe to access GPU resources and popular large language models (LLMs) for tasks like inference, natural language processing, and computer vision.

- Contributor Revenue Sharing: GPU contributors receive up to 50% of the API subscription revenue, incentivizing them to provide consistent and reliable computational resources.

- Scalable Revenue: This model ensures ongoing revenue through recurring subscriptions, supporting both Grafilab and its contributors.

AI App Store

- Subscription Model: Similar to a Netflix-style offering, users subscribe to access multiple AI apps and agents in a single package.

- Revenue Distribution: Developers earn based on the usage of their AI apps or agents. Apps with higher user traffic receive greater profits.

- Platform Fee: Grafilab collects a 5% platform fee from the subscription revenue, with the remaining earnings distributed proportionally to developers based on app usage metrics.

- Pay-Per-Use Model: Users pay directly for each interaction or usage of the AI app. Grafilab takes a 10% transaction fee for facilitating the process.

- Direct Purchase Model: Users can purchase lifetime access to specific AI apps. Grafilab earns a service fee based on a percentage of the sale value.
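The App Store fee rules above reduce to two small calculations: a 5% cut of the subscription pool with the remainder shared in proportion to usage, and a 10% cut of each pay-per-use charge. A sketch, assuming usage is measured as a single number per app (the actual usage metric is not specified); the direct purchase fee percentage is not given, so it is not modeled:

```python
from decimal import Decimal, ROUND_DOWN

SUBSCRIPTION_PLATFORM_FEE = Decimal("0.05")  # 5% of subscription revenue goes to Grafilab
PAY_PER_USE_FEE = Decimal("0.10")            # 10% transaction fee on pay-per-use
CENT = Decimal("0.01")


def split_subscription_pool(revenue: Decimal, usage_by_app: dict) -> dict:
    """Deduct the platform fee, then share the remainder in proportion to each app's usage.

    Payouts are rounded down to the cent, so any sub-cent remainder stays unallocated here.
    """
    total_usage = sum(usage_by_app.values())
    if total_usage <= 0:
        raise ValueError("usage_by_app must contain positive usage")
    fee = (revenue * SUBSCRIPTION_PLATFORM_FEE).quantize(CENT)
    pool = revenue - fee
    payouts = {
        app: (pool * usage / total_usage).quantize(CENT, rounding=ROUND_DOWN)
        for app, usage in usage_by_app.items()
    }
    return {"platform_fee": fee, "developers": payouts}


def split_pay_per_use(charge: Decimal) -> tuple:
    """Return (developer share, platform fee) for one pay-per-use transaction."""
    fee = (charge * PAY_PER_USE_FEE).quantize(CENT)
    return charge - fee, fee


# Hypothetical month: 1,000.00 of subscription revenue across three apps.
monthly = split_subscription_pool(Decimal("1000.00"), {"app_a": 600, "app_b": 300, "app_c": 100})
print(monthly)
print(split_pay_per_use(Decimal("2.00")))
```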

Grafi's Universal Payment and Burning Mechanism

- Flexible Payments: Users can pay for services in fiat, USDC, or other supported tokens, while payments are settled in $GRAFI tokens behind the scenes.

- Instant and Secure Transactions: Utilizing crypto and smart contracts, payments are transparent, instant, and eliminate the need for escrow or delayed billing systems.

- Structural $GRAFI Demand:

- Customers pay in fiat or other currencies.

- Contributors and suppliers are compensated in $GRAFI tokens, ensuring consistent token utilization across the platform.

- Dynamic Buyback and Burn: A portion of revenue from all services is used to purchase and burn $GRAFI tokens, reducing the circulating supply.

- Deflationary Pressure: This mechanism reduces token availability, which is intended to support long-term value while adjusting dynamically to market conditions.
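The settlement and burn flow can be sketched as converting a fiat or stablecoin payment to $GRAFI at a market price, burning a share, and paying out the rest. The `burn_fraction`, token price, and circulating supply below are hypothetical, since the whitepaper specifies only "a portion" of revenue; platform fees and exchange slippage are ignored:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Settlement:
    grafi_bought: float    # tokens acquired with the customer's payment
    grafi_burned: float    # tokens permanently removed from circulation
    grafi_paid_out: float  # tokens left to compensate contributors and suppliers


def settle(payment_usd: float, grafi_price_usd: float, burn_fraction: float) -> Settlement:
    """Convert a fiat/stablecoin payment into $GRAFI, burn a slice, and pay out the rest.

    burn_fraction is a hypothetical parameter; no burn rate is published.
    """
    if grafi_price_usd <= 0:
        raise ValueError("grafi_price_usd must be positive")
    if not 0.0 <= burn_fraction <= 1.0:
        raise ValueError("burn_fraction must be between 0 and 1")
    bought = payment_usd / grafi_price_usd
    burned = bought * burn_fraction
    return Settlement(bought, burned, bought - burned)


circulating = 1_000_000_000.0  # illustrative circulating supply, not a published figure
s = settle(payment_usd=100.0, grafi_price_usd=0.05, burn_fraction=0.10)
circulating -= s.grafi_burned  # each burn shrinks the circulating supply
print(s, circulating)
```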

Token Info

Token Overview

| Attribute | Value |
| --- | --- |
| Token Name | Grafi |
| Token Ticker | $GRAFI |
| Token Standard | SPL20 |
| Total Supply | 2,100,000,000 $GRAFI |
| Network Information | Solana Network |

Token Distribution and Allocations

- Purpose: To incentivize and reward contributors who drive innovation and participation within the Grafilab ecosystem.

  - AI Developers: Rewarded for submitting, training, and deploying AI models on the Grafi AI Data Layer and Co-Builder. Their contributions help enhance the quality and diversity of AI applications in the ecosystem.

  - Users: Earn rewards for contributing high-quality data, engaging with AI applications, and participating in the Grafi AI App Store and Data Layer. This ensures sustained growth and improved ecosystem dynamics.

  - Holders: Incentives for staking and liquidity provision, governance participation, and reward programs on the official portal, CEXs, DEXs, or partner platforms.

- Purpose: Dedicated to rewarding contributors who provide GPU compute power.

  - Encourages GPU owners and decentralized participants (DePIN contributors) to share their resources, powering AI tasks and enabling decentralized infrastructure growth.

  - Promotes the active building and strengthening of Grafilab's decentralized infrastructure, ensuring cost-efficient and scalable computing.

- Purpose: To incentivize participants who run AI Nodes.

  - Rewards operators for decentralized data indexing, validation, and publishing on the Grafi AI Data Layer.

  - Ensures the integrity and efficiency of the data layer while supporting decentralized AI solutions.

- Purpose: To support the core team and early contributors.

  - Provides long-term incentives to ensure sustained commitment and continuous development of the Grafilab ecosystem.

  - Recognizes the critical contributions of advisors in shaping strategic growth.

- Purpose: Reserved for the official token sale via the Grafi Public Token Sales Portal and launchpad partners.

  - Enables broad access to $GRAFI, ensuring decentralized ownership and ecosystem participation.

- Purpose: To support long-term sustainability and strategic growth.

  - Funds research, development, and the evolution of new platform features and initiatives.

  - Ensures Grafilab remains at the forefront of AI and decentralized innovation.

- Purpose: To maintain a stable and robust token economy.

  - Ensures sufficient liquidity for trading $GRAFI tokens on exchanges, enhancing market stability.

  - Funds marketing campaigns to increase

Token Utility

Token Utilities for $GRAFI:

- Serves as the primary currency for all transactions within the Grafilab ecosystem, including:

  - Subscriptions to AI models on the Grafi AI App Store

  - GPU rental on Grafi Cloud

  - Co-Builder platform services

- Rewards: Users can stake $GRAFI tokens to earn rewards and unlock premium features across the ecosystem.

- Security: Acts as a minimum security deposit for GPU contributors, ensuring accountability, fraud prevention, and the integrity of the DePIN (Decentralized Physical Infrastructure Network).

- Enables token holders to participate in governance:

  - Voting on key proposals.

  - Submitting new ideas or improvements.

  - Participating in bounty programs that shape the future of Grafilab.

- Compute Mining Rewards: GPU providers (CeDePIN contributors) are incentivized with $GRAFI tokens for offering decentralized computational power to the network.

- AI Node Rewards: AI Node Operators earn $GRAFI tokens for validating, indexing, and maintaining the data integrity of the AI Data Layer.

- Ecosystem Incentives: Data providers and AI developers earn $GRAFI by contributing valuable datasets, AI models, or collaborating on Grafilab platforms to enhance ecosystem innovation and growth.

Token Emission

Compute Mining Emission

1. Allocation

- Total Compute Mining Allocation: 15% of total $GRAFI supply

- Total Compute Mining Emission: 315,000,000 $GRAFI (i.e., 15% of the entire supply is set aside for rewarding decentralized GPU providers.)

2. Reward Factors

Four key factors determine how these monthly emissions are distributed among individual GPU providers:

i. GPU Model: Higher-performance GPUs (e.g., newer architectures, larger memory, or faster processing) earn a larger portion of rewards.

ii. Uptime Ratio: GPUs that remain online and available for longer durations receive a higher share of rewards.

iii. Bandwidth: Providers offering higher network throughput or more data-transfer capacity qualify for enhanced rewards.

iv. Staking Collateral: GPU providers who stake more $GRAFI tokens gain a multiplier on their compute mining rewards, incentivizing them to align with the network’s long-term success.
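The whitepaper names four reward factors but not how they combine. One plausible scheme, shown here only as an assumption, multiplies them into a per-provider weight and splits each month's 3,150,000 $GRAFI emission (from the emission schedule) pro rata. The GPU scores, bandwidth curve, and staking multiplier below are invented for illustration:

```python
from dataclasses import dataclass

MONTHLY_EMISSION = 3_150_000  # $GRAFI per month (1% of the 315,000,000 mining allocation)

# Hypothetical scoring table: actual per-model weights are not published.
GPU_MODEL_SCORE = {"RTX 3090": 1.0, "RTX 4090": 1.6, "A100": 2.5, "H100": 4.0}


@dataclass
class Provider:
    name: str
    gpu_model: str
    uptime_ratio: float    # fraction of the month online, 0.0 to 1.0
    bandwidth_mbps: float
    staked_grafi: float


def staking_multiplier(staked: float) -> float:
    """Assumed: +10% per full 10,000 $GRAFI staked, capped at 2x."""
    return min(1.0 + 0.1 * (staked // 10_000), 2.0)


def bandwidth_factor(mbps: float) -> float:
    """Assumed: scales linearly up to 1 Gbps, with a 0.5 floor so slower nodes still earn."""
    return max(0.5, min(mbps / 1_000, 1.0))


def reward_weight(p: Provider) -> float:
    """Combine the four factors multiplicatively (an assumption, not a published formula)."""
    return (GPU_MODEL_SCORE[p.gpu_model] * p.uptime_ratio
            * bandwidth_factor(p.bandwidth_mbps) * staking_multiplier(p.staked_grafi))


def distribute(providers: list, emission: float = MONTHLY_EMISSION) -> dict:
    """Split one month's emission pro rata to each provider's weight."""
    weights = {p.name: reward_weight(p) for p in providers}
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("no eligible providers this month")
    return {name: emission * w / total for name, w in weights.items()}


providers = [
    Provider("node-a", "RTX 4090", uptime_ratio=0.95, bandwidth_mbps=800, staked_grafi=20_000),
    Provider("node-b", "RTX 3090", uptime_ratio=0.99, bandwidth_mbps=300, staked_grafi=0),
]
for name, amount in distribute(providers).items():
    print(f"{name}: {amount:,.0f} $GRAFI")
```

A multiplicative combination means a provider with near-zero uptime earns near-zero rewards regardless of hardware, which matches the intent of the uptime factor; an additive scheme would behave differently.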

3. Emission Schedule

- Total Mining Supply: 315,000,000 $GRAFI

- Monthly Emission Rate: 1% of the 315,000,000 $GRAFI (i.e., 3,150,000 $GRAFI) every month.

First Month: Emission of 3,150,000 $GRAFI (1% of 315,000,000)

Following Months: Emission of 3,150,000 $GRAFI each month (1% of 315,000,000)

Because each month emits a fixed 1% of the total mining allocation, the full 315,000,000 $GRAFI will be distributed over 100 months (8 years and 4 months).
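The schedule arithmetic can be checked directly with integer math, since a flat 1% of a fixed allocation per month exhausts it in exactly 100 months:

```python
TOTAL_SUPPLY = 2_100_000_000
MINING_ALLOCATION = TOTAL_SUPPLY * 15 // 100  # 15% of supply: 315,000,000 $GRAFI
MONTHLY_EMISSION = MINING_ALLOCATION // 100   # 1% of the allocation: 3,150,000 $GRAFI


def emission_schedule(allocation: int, monthly: int):
    """Yield (month, emitted this month, cumulative emitted) until the allocation is exhausted."""
    emitted = 0
    month = 0
    while emitted < allocation:
        month += 1
        this_month = min(monthly, allocation - emitted)
        emitted += this_month
        yield month, this_month, emitted


schedule = list(emission_schedule(MINING_ALLOCATION, MONTHLY_EMISSION))
months = len(schedule)
print(f"{months} months = {months // 12} years and {months % 12} months")
# prints "100 months = 8 years and 4 months"
```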

Roadmap

Q3 2024

- Strategic Partnerships: Signing of Gigabit MOU for long-term collaboration.

- User Growth Initiatives: Launch of Telegram Mini App onboarding campaign targeting 80,000 users, complemented by the Footprint program to onboard 110,000 users.

- Incubation & Acceleration: Inclusion in the prestigious DTC Incubation and Acceleration Programme to strengthen market readiness.

Q4 2024

- GPU Contribution Alpha Launch: Rollout of the alpha phase for GPU contribution to onboard early testers and refine the system.

- Private Sale Round: Onboarding for early investors and strategic partners for project scalability.

- Public Token Sale Preparations: Finalizing groundwork for the $GRAFI token public sale launch.

Q1 2025

- Core Product Development: Beta launch of the GRAFI CeDePIN Cloud and Inference API.

- AI Advancements: Introduction of MoE LLM (Mixture of Experts Large Language Model) and Data Swarm for optimized AI processing and data integration.

- Strategic Partnerships: Collaborations with leading AI Agent Framework providers and AI Agent Launchpads to expand application usability.

- Public Sale Round: Public Sale and Token Generation Event (TGE), followed by listings on prominent exchanges.

Q2 2025

- GPU Network Expansion: Scaling CePIN GPU resources and connecting them to long-term contractual demand for stability and scalability.

- Grafi AI App Store Launch: A subscription-based marketplace for AI agents, applications, and tools.

- AI Node Launch: Official rollout of AI Nodes to support decentralized data validation, indexing, and publication.

- Onboarding the Open-Source Community: More open-source frameworks, tools, and plugins will be available on the Co-Builder console.

Q3 2025

- LLM Fine-Tuning: Fine-tuning the MoE LLM and other LLMs on high-quality labeled data from the data swarm to make them more powerful.

- Data Layer Rewarding: Data is digital oil. Every data contributor who provides high-quality input is prioritized and deserves to be rewarded.

- Ecosystem Incubation: Growing our ecosystem through the Grafilab incubation program, hosting hackathons and innovation bounty quests for the developer community.